At Endor Labs, our mission is to help application security teams cut through the noise and fix what matters. That often means reducing false positives from legacy tools. But it also means catching false negatives — high-impact security changes that never trigger an alert in the first place.

Today, we’re introducing AI Security Code Review, a new capability that helps AppSec teams move beyond reactive vulnerability management. Built on the next generation of Endor Labs’ application security platform, it uses multiple AI agents to review pull requests for design flaws and architectural changes that affect your security posture — like new API endpoints, modifications to authentication logic, or the collection of new sensitive data.

Why traditional tools miss real risks

Most application security tools are built around rules and signatures. Static application security testing (SAST) detects known anti-patterns and insecure functions. Software composition analysis (SCA) flags vulnerable packages with known CVEs. Both are important, but they examine isolated files, functions, or packages and assume the risk is recognizable from a fixed rule.

What they miss are changes to your application’s design or behavior, especially changes that are technically valid but meaningfully shift your security posture.

For example:

- A new API endpoint is introduced without authentication

- An OAuth 2.0 flow is modified, breaking CSRF protection

- New PII is collected without triggering a data processing review

- TLS configuration is changed to use a nonstandard crypto library

These issues often map to known CWEs, such as CWE-306 (Missing Authentication for Critical Function), CWE-352 (Cross-Site Request Forgery), or CWE-327 (Use of a Broken or Risky Cryptographic Algorithm). Rule-based tools still miss them because the surrounding context is missing. Without understanding how components interact or why a change matters, static rules alone can’t flag the risk.

AI-generated code is making the problem worse

AI-generated code is accelerating development: today, 75% of developers use AI coding assistants like GitHub Copilot and Cursor. But those assistants don’t always produce secure code. A recent study found that 62% of AI-generated code solutions contain design flaws or security weaknesses, even when generated by state-of-the-art LLMs.

At the same time, developers are becoming less involved in the fine details of the code they ship. AI coding assistants can introduce subtle changes to authentication, data flow, or infrastructure — changes that may look valid and clean, and even pass your SAST scan, but have real implications for security.

Catching these issues requires context: understanding what changed, how it fits into the broader system, and why it matters. Manual reviews can sometimes catch them — but not at the scale or speed of modern development.

How AI Security Code Review works

AI Security Code Review is built on Endor Labs’ agentic AI platform — designed specifically for application security teams. It combines purpose-built agents, security tools, memory, and deep knowledge of code to deliver precise, contextual analysis at scale.

AI Security Code Review analyzes every pull request using a system of AI agents acting as developer, architect, and security engineer. These agents work together to progressively analyze, summarize, categorize, and prioritize changes to your application’s security design and architecture.

- Developer agent — summarizes what changed in the code diff

- Architect agent — categorizes changes using a security-focused ontology

- Security agent — evaluates the impact on overall security posture and assigns a priority level
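To make the hand-off between the three agents concrete, here is a minimal Python sketch of how such a layered pipeline could be wired together, with each stage consuming the previous stage’s output. It is not Endor Labs’ implementation: the `Review` record, the `review_pull_request` function, and the three agent callables are hypothetical. In a real system each callable would wrap an LLM-backed agent rather than the stub lambdas used here.

```python
from dataclasses import dataclass, field
from typing import Callable, List

PRIORITIES = ("Critical", "High", "Medium", "Low")


@dataclass
class Review:
    diff: str
    summary: str = ""
    categories: List[str] = field(default_factory=list)
    priority: str = ""


# Each agent is modeled as a plain callable (hypothetical stand-ins for LLM-backed agents).
Summarizer = Callable[[str], str]
Categorizer = Callable[[str], List[str]]
Prioritizer = Callable[[str, List[str]], str]


def review_pull_request(diff: str, developer: Summarizer,
                        architect: Categorizer, security: Prioritizer) -> Review:
    review = Review(diff=diff)
    # Developer agent: describe what changed in the diff.
    review.summary = developer(diff)
    # Architect agent: map the summary onto security categories.
    review.categories = architect(review.summary)
    # Security agent: weigh the summary and categories, then assign a priority.
    review.priority = security(review.summary, review.categories)
    if review.priority not in PRIORITIES:
        raise ValueError(f"unexpected priority: {review.priority!r}")
    return review


result = review_pull_request(
    '+ mux.HandleFunc("/admin/flags", setFlag)',
    developer=lambda d: "Adds an admin endpoint for feature flags",
    architect=lambda s: ["API endpoint", "Access control"],
    security=lambda s, c: "Critical" if "Access control" in c else "Low",
)
print(result.priority)  # Critical
```

Keeping each stage a separate callable mirrors the progressive structure described above: the security stage never sees the raw diff in this sketch, only the summary and categories produced upstream.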

This layered reasoning moves beyond pattern-matching or keyword rules. It evaluates changes in the context of your application: how code is structured, what data is handled, and how changes interact across different files in the pull request.

Because the system summarizes what changed and why it matters, it also gives AppSec engineers the context they need to review unfamiliar codebases. That’s especially useful when supporting dozens of teams or reviewing code across hundreds of microservices — where no one can be an expert in every repo.

What it detects

AI Security Code Review identifies pull requests that affect your security architecture. The agents categorize each change by domain and assign it a priority level (Critical, High, Medium, Low) based on context and potential impact.

The table below lists the categories we detect:

| Category | Example |
|---|---|
| AI | Prompt injection |
| API endpoint | New public API released |
| Access control | Authentication method changed |
| CI/CD | New CI/CD workflow created |
| Configuration | Terraform or other configuration files updated |
| Cryptographic | Cryptographic library for TLS switched |
| Database | Database schema changed |
| Dependency | Dependency with privileged access introduced |
| Input validation | Method vulnerable to SQL injection added |
| Memory management | Memory allocation changed in C code |
| Network | Network security controls modified |
| Payment processing | Stripe integration changed |
| PII data handling | New personally identifiable information (PII) collected |

Each PR summary includes the affected areas, impact, and a rationale for why the change matters — even when it doesn’t look like a typical vulnerability.

Real-world examples

Traditional SAST tools often miss critical security changes because they don’t match defined patterns or rules. These false negatives are some of the most dangerous, and AI Security Code Review is built to catch them. Here are some real-world examples from our testing:

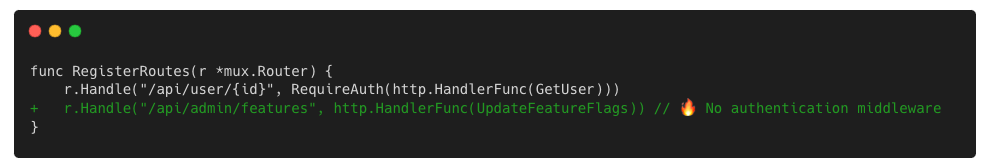

New admin API endpoint without authentication

A developer adds a new API endpoint to manage feature flags — but skips authentication. It’s functionally correct, but introduces a critical access control risk.

Go application where a developer introduced a new API endpoint without authentication.

Why this was a false negative: There’s no insecure function or known bad pattern. The code is valid, and no rule is violated. But the architectural implication — unauthenticated access to admin functionality — is critical.
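The original example is a Go application. The Python sketch below reproduces the shape of the problem using a hypothetical in-process router: `dispatch`, `set_flag`, `require_auth`, and the placeholder `API_TOKEN` are illustrative names, not code from the screenshot. Both route tables are valid code; the only difference is whether the admin handler is wrapped in an authentication check, which is exactly the kind of omission no single-line rule matches.

```python
import hmac
from typing import Callable, Dict, Tuple

Request = Dict[str, str]    # hypothetical: a flat dict of path, headers, and form fields
Response = Tuple[int, str]  # (status code, body)
Handler = Callable[[Request], Response]

API_TOKEN = "example-token"  # placeholder; load from a secret store in practice
FEATURE_FLAGS: Dict[str, bool] = {}


def set_flag(req: Request) -> Response:
    # Functionally correct: toggles a feature flag. Nothing checks who is calling.
    FEATURE_FLAGS[req["flag"]] = req.get("enabled") == "true"
    return 200, "ok"


def require_auth(handler: Handler) -> Handler:
    # Wraps a handler so it only runs when the expected bearer token is supplied.
    def wrapped(req: Request) -> Response:
        supplied = req.get("authorization", "").encode()
        expected = f"Bearer {API_TOKEN}".encode()
        if not hmac.compare_digest(supplied, expected):  # constant-time comparison
            return 401, "unauthorized"
        return handler(req)
    return wrapped


# The risky change: the admin route is registered with the bare handler.
insecure_routes: Dict[str, Handler] = {"/admin/flags": set_flag}
# The fix: the same handler, wrapped in an authentication check.
secure_routes: Dict[str, Handler] = {"/admin/flags": require_auth(set_flag)}


def dispatch(routes: Dict[str, Handler], req: Request) -> Response:
    handler = routes.get(req["path"])
    if handler is None:
        return 404, "not found"
    return handler(req)


anonymous = {"path": "/admin/flags", "flag": "beta", "enabled": "true"}
print(dispatch(insecure_routes, anonymous))  # (200, 'ok')
print(dispatch(secure_routes, anonymous))    # (401, 'unauthorized')
```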

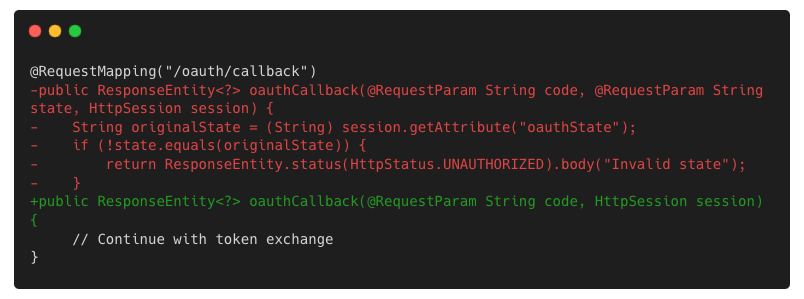

SSO flow modified, breaking CSRF protection

To simplify login, a developer removes the state parameter from an OAuth 2.0 flow. The app still works — but it silently breaks a key CSRF protection.

Java application where a developer removed the state parameter.

Why this was a false negative: The change looks benign, with no dangerous API call and no invalid logic. But it introduces a security regression that only becomes apparent if you know how OAuth is supposed to be implemented.
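For context, the OAuth 2.0 `state` parameter defends against CSRF by binding the authorization response to the browser session that started the login. The Python sketch below shows the check that the modified flow dropped. The function names, the in-memory `session` dict, and the `AUTHORIZE_URL` endpoint are hypothetical; a production app would normally rely on its OAuth library’s built-in state handling.

```python
import hmac
import secrets
from urllib.parse import parse_qs, urlencode, urlparse

AUTHORIZE_URL = "https://idp.example.com/authorize"  # hypothetical identity provider


def start_login(session: dict, client_id: str, redirect_uri: str) -> str:
    # Generate an unguessable value and remember it in the user's session.
    state = secrets.token_urlsafe(32)
    session["oauth_state"] = state
    params = {
        "response_type": "code",
        "client_id": client_id,
        "redirect_uri": redirect_uri,
        "state": state,
    }
    return f"{AUTHORIZE_URL}?{urlencode(params)}"


def handle_callback(session: dict, query: dict) -> str:
    # pop() makes the state single-use, so a replayed callback is rejected.
    expected = session.pop("oauth_state", None)
    received = query.get("state")
    if expected is None or received is None:
        raise PermissionError("missing OAuth state")
    if not hmac.compare_digest(expected.encode(), received.encode()):
        raise PermissionError("OAuth state mismatch: possible CSRF")
    return query["code"]  # only now is it safe to exchange the code for tokens


session: dict = {}
url = start_login(session, "my-client", "https://app.example.com/callback")
state = parse_qs(urlparse(url).query)["state"][0]
code = handle_callback(session, {"code": "abc123", "state": state})
```

Removing `state` from `start_login` and the comparison from `handle_callback` leaves a flow that still logs users in, which is why the regression is invisible without knowing what the parameter is for.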

AES key compiled into binary

An AES key is base64-encoded and stored in a static config class, then accessed through a helper method. Easy for the dev. Easy for an attacker with the binary.

Developer stores an AES key as a base64-encoded string.

They access the key from another file using a helper method.

Why this was a false negative: The key wasn’t flagged by secrets detection or SAST because it wasn’t hardcoded in a typical way. Without multi-file reasoning, it looked like safe config handling — but the binary leaked the key.
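The pattern is easy to reproduce. In the Python sketch below, the class, function, and environment variable names are hypothetical and the key is a dummy value. The first helper decodes a key that ships inside the artifact, so anyone holding the build can recover it. The second reads the key at runtime from the environment, which is one common alternative (a secrets manager or KMS is another). Neither helper performs encryption; they only show where the key comes from.

```python
import base64
import os


class StaticConfig:
    # Anti-pattern: the key is compiled into the artifact. Base64 is encoding, not protection.
    AES_KEY_B64 = "MDEyMzQ1Njc4OWFiY2RlZg=="  # dummy 16-byte key


def get_key_embedded() -> bytes:
    # Helper in another module: callers never see the literal, but the build still contains it.
    return base64.b64decode(StaticConfig.AES_KEY_B64)


def get_key_from_env(var: str = "APP_AES_KEY") -> bytes:
    # Alternative: inject the key at deploy time so it never lives in source or build output.
    encoded = os.environ.get(var)
    if not encoded:
        raise RuntimeError(f"{var} is not set")
    key = base64.b64decode(encoded, validate=True)
    if len(key) not in (16, 24, 32):  # AES-128, AES-192, AES-256
        raise ValueError("AES keys must be 16, 24, or 32 bytes")
    return key
```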

Get started

It’s easy to deploy and roll out AI Security Code Review using the Endor Labs GitHub App. Once enabled, you’ll start getting insights for new PRs created across the projects in your GitHub organization.

Insights from AI Security Code Review will appear in a dedicated Security Review section within each project. Each finding includes information about the developer submitting the pull request and any assigned code reviewers, so you know exactly who to reach out to for an in-depth review.

AI Security Code Review is integrated with Endor Labs’ flexible policy engine and works with your existing source code management (SCM) tools and project management systems. That makes it easy to automate reviews and workflows — for example, creating tickets for the appropriate stakeholders based on the type of change.

Stakeholders might include:

- GRC teams for compliance-related reviews and PII handling

- DevOps teams for infrastructure and configuration modifications

- Security champions for in-depth code review by engineers familiar with the project
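As a rough illustration of category-based routing, the Python sketch below maps categories from the table above to owning teams. It is not the Endor Labs policy engine or its configuration syntax: `ROUTES`, `DEFAULT_OWNER`, and `route_finding` are hypothetical, and sending unmapped categories to security champions is an assumption made for the example.

```python
from typing import Dict, List

# Hypothetical routing table: keys follow the category names above; team names are placeholders.
ROUTES: Dict[str, str] = {
    "PII data handling": "GRC",
    "Configuration": "DevOps",
    "CI/CD": "DevOps",
    "Network": "DevOps",
}
DEFAULT_OWNER = "Security champions"  # assumption: everything else gets a code-level review


def route_finding(categories: List[str]) -> List[str]:
    # Return each owning team once, in the order its first category appears.
    owners: List[str] = []
    for category in categories:
        owner = ROUTES.get(category, DEFAULT_OWNER)
        if owner not in owners:
            owners.append(owner)
    return owners


print(route_finding(["PII data handling", "Configuration", "CI/CD"]))  # ['GRC', 'DevOps']
print(route_finding(["Access control"]))                               # ['Security champions']
```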

Conclusion

AI Security Code Review helps application security teams focus their efforts where it matters most — identifying the few code changes that introduce real security risk, even when they don’t match known vulnerabilities or weaknesses.